Automatic detection of facial landmarks in paediatric CT scans using a convolutional neural network

Aaron Rankin, United Kingdom

PD-0069

Abstract

Authors: Aaron Rankin¹, Edward Henderson², Oliver Umney², Abigail Bryce-Atkinson², Andrew Green³, Eliana Vásquez Osorio²

¹University of Manchester, Division of Cancer Sciences, Manchester, United Kingdom; ²University of Manchester, Division of Cancer Sciences, School of Medical Sciences, Faculty of Biology, Medicine and Health, Manchester, United Kingdom; ³University of Manchester, Division of Cancer Sciences, School of Medical Sciences, Faculty of Biology, Medicine and Health, Derry, United Kingdom

Purpose or Objective

In children receiving radiotherapy, damage to healthy tissue and bone structures can lead to facial asymmetries. Tools for the automatic evaluation of bone structures on 3D images would help to monitor and quantify the development of facial asymmetry in these patients. Because paediatric data are scarce, little research has assessed the performance of machine learning models on paediatric data. Approaches such as transfer learning can facilitate the translation of adult-trained models to paediatric data. Here, we investigate the performance of a convolutional neural network (CNN) that uses transfer learning to detect facial anatomical landmarks in 3D CT scans.

Material and Methods

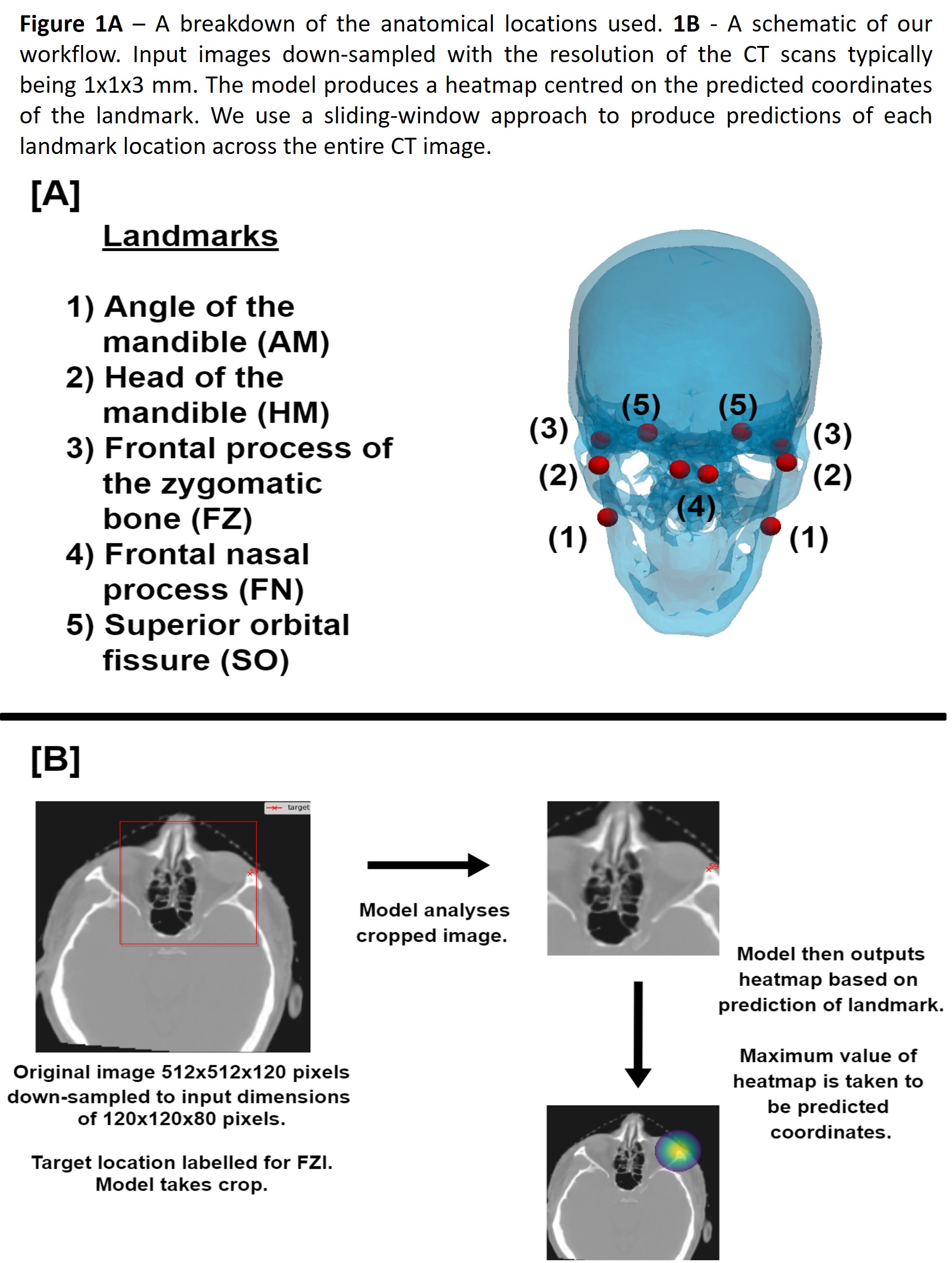

Facial

landmarks were selected through an extensive literature review, to contain both

left and right components with minimal anatomical differences between adult and

paediatric patients (fig 1A). A training dataset of 104 CT scans from adult

head and neck cancer patients was annotated by two observers. A random point

between the two annotations was used as a form of data augmentation and to

reduce the impact of interobserver (IOV) bias. The UNet-based CNN model was

trained for 100 epochs, using several commonly used data augmentation steps

(e.g., translational shifts and left-right mirroring). A heatmap was produced

by the network based on the confidence of the predicted landmark location. The

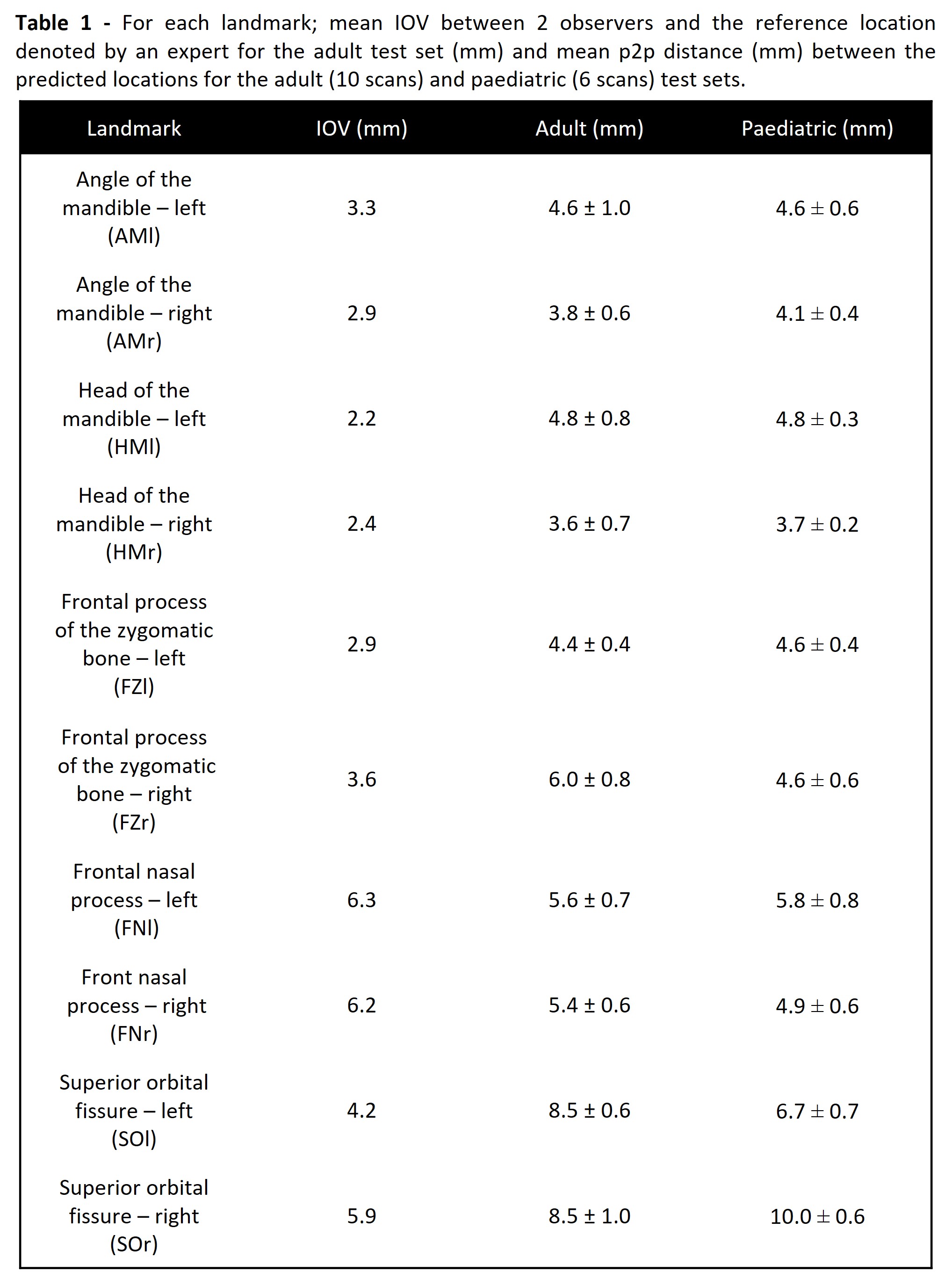

model was evaluated on a test set of 10 scans annotated by an expert. We

investigated the IOV between the locations selected by the two observers and

the expert (Table 1) to determine if this had a considerable effect on the

model’s performance.
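The annotation sampling and heatmap output described above can be illustrated with a minimal sketch. It is not the authors' implementation: the uniform sampling along the straight segment between the two observers' points, the Gaussian shape and width (`sigma`) of the heatmap, and the argmax decoding are all assumptions, and the function names and coordinates are hypothetical.

```python
import numpy as np

def sample_between(obs_a, obs_b, rng):
    """Draw a random point on the segment joining two annotations of one landmark.

    Assumption: the point is sampled uniformly along the straight line
    between the two observers' voxel coordinates.
    """
    obs_a = np.asarray(obs_a, dtype=float)
    obs_b = np.asarray(obs_b, dtype=float)
    t = rng.uniform(0.0, 1.0)
    return obs_a + t * (obs_b - obs_a)

def gaussian_heatmap(shape, centre, sigma=2.0):
    """Build a 3D Gaussian blob centred on a landmark (voxel coordinates).

    Assumption: a Gaussian is used here purely to stand in for a
    confidence heatmap; the abstract does not specify its form.
    """
    grids = np.meshgrid(*[np.arange(n) for n in shape], indexing="ij")
    sq_dist = sum((g - c) ** 2 for g, c in zip(grids, centre))
    return np.exp(-sq_dist / (2.0 * sigma ** 2))

def decode_heatmap(heatmap):
    """Return the voxel index with the highest confidence (argmax decoding)."""
    return np.unravel_index(np.argmax(heatmap), heatmap.shape)

# Toy coordinates, not study data.
rng = np.random.default_rng(0)
target = sample_between((10, 12, 8), (14, 12, 10), rng)
heatmap = gaussian_heatmap((32, 32, 32), target)
predicted = decode_heatmap(heatmap)
```

Because a new point is drawn each time a scan is presented, the network never sees exactly the same target twice, which is one way such sampling can act as augmentation while avoiding a preference for either observer.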

The best-performing adult model was fine-tuned on 10 paediatric scans for a further 100 epochs and then evaluated on 6 unseen paediatric scans. All paediatric scans (age 4-17 y) were annotated by an expert. We quantified model performance by measuring the 3D Euclidean point-to-point (p2p) distance between the predicted locations and the manual annotations, for both the best adult CNN model and the final paediatric model.
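The p2p metric can be written as a short sketch, assuming landmarks are stored as voxel indices and that the voxel spacing in mm is known per axis. The function name, the axis order of `spacing_mm`, and the toy coordinates are hypothetical and are not taken from the study.

```python
import numpy as np

def p2p_error_mm(pred_vox, ref_vox, spacing_mm):
    """3D Euclidean distance in mm between predicted and reference landmarks.

    pred_vox, ref_vox: arrays of shape (n_landmarks, 3) in voxel indices.
    spacing_mm: voxel size along each axis, in the same axis order
    as the indices (an assumption of this sketch).
    """
    pred = np.asarray(pred_vox, dtype=float)
    ref = np.asarray(ref_vox, dtype=float)
    # Convert the voxel offsets to physical offsets before taking the norm.
    diff_mm = (pred - ref) * np.asarray(spacing_mm, dtype=float)
    return np.linalg.norm(diff_mm, axis=-1)

# Toy values, not study data.
pred = [[100, 120, 40], [150, 118, 42]]
ref = [[103, 124, 40], [150, 118, 43]]
spacing = (1.0, 1.0, 2.5)

errors = p2p_error_mm(pred, ref, spacing)
mean_error = errors.mean()
sd_error = errors.std()
```

Scaling by the spacing matters for anisotropic CT volumes: an offset of one slice can correspond to a larger physical distance than an offset of one in-plane voxel.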

Results

The average p2p error across all 10 landmarks on adult CT scans was 5.6 ± 0.8 mm, comparable to the IOV limits of our training data and the resolution of the scans (Table 1). Following the adaptation of our model to paediatric data using transfer learning, the average p2p error was 5.4 ± 0.5 mm across all 10 landmarks. The model therefore performed as well as on adult data and showed similar variation in accuracy depending on landmark location (Table 1).

Conclusion

We developed a CNN model to locate facial landmarks in adult and paediatric 3D CT images. We demonstrate that a model trained on adult CT scans can be successfully adapted, using transfer learning, to locate landmarks in paediatric images without a significant loss of accuracy. Further work would involve a larger paediatric dataset that includes patients with facial asymmetry, to explore the ability of the model to quantify structural asymmetries in different areas of the face.