3DCT reconstruction from single X-ray projection using Deep Learning

MO-0215

Abstract

Authors: Estelle Loyen1, Damien Dasnoy-Sumell1, Benoît Macq1

1Université Catholique de Louvain, Institute of Information and Communication Technologies, Electronics and Applied Mathematics, Louvain-la-Neuve, Belgium

Purpose or Objective

The treatment of abdominal or thoracic tumors is challenging in particle therapy because respiratory motion displaces the tumor. One current option for following the breathing motion is to regularly acquire one (or two) X-ray projection(s) during the treatment, but these do not give the full 3D anatomy. The purpose of this work is therefore to reconstruct a 3DCT image from a single X-ray projection using a neural network.

Material and Methods

Three patients are considered in this study. The network is trained on patient-specific CT data so that it captures each patient's individual features. For each patient, the learning dataset contains 260 3DCT images created by oversampling a planning 4DCT with random deformations. Digitally reconstructed radiographs (DRRs) are generated from these 3DCTs. A neural network is then trained to map each DRR to its paired 3DCT. This network, proposed by Henzler et al. [1], is a deep CNN (dCNN) with an encoder-decoder structure and skip connections. The learning set is split 90/10 into training and test sets. To localize the mispredicted regions, a non-rigid registration is applied between the predicted images and the ground-truth images.
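The data preparation described above can be illustrated with a minimal sketch. It is not the authors' pipeline: the parallel-beam projection, the water attenuation coefficient, the voxel spacing, and the function names `simple_drr` and `split_indices` are all assumptions made for illustration.

```python
import numpy as np

# Assumed linear attenuation coefficient of water in 1/mm (illustrative value).
MU_WATER_PER_MM = 0.02


def simple_drr(ct_hu, axis=1, spacing_mm=1.0):
    """Parallel-beam DRR sketch: integrate attenuation along one axis.

    ct_hu is a 3D array in Hounsfield units. A clinical DRR would use the
    divergent-beam geometry of the imaging system; this is a simplification.
    """
    # Convert HU to an approximate attenuation coefficient, clipped at zero.
    mu = np.clip(MU_WATER_PER_MM * (1.0 + ct_hu / 1000.0), 0.0, None)
    # Line integral along the chosen axis (sum times voxel size).
    return mu.sum(axis=axis) * spacing_mm


def split_indices(n_samples, test_fraction=0.1, seed=0):
    """Shuffle sample indices and split them into training and test sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    return order[n_test:], order[:n_test]


# 260 images per patient with a 90/10 split gives 234 training and 26 test images.
train_idx, test_idx = split_indices(260)
```

Each training pair would then consist of `simple_drr(volume)` as the network input and `volume` as the target.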

An additional test set consisting of 11 specific images is also generated to better quantify the results. These 11 3DCTs are created at regular intervals between two breathing phases N and N+1 by applying the deformation field between both phases to phase N. This results in a set of intermediate phases (e.g., phases N.1, N.2, N.3, …) for which the deformation relative to the reference phase N is known in mm. The normalized root-mean-square error (NRMSE) can then be computed between each intermediate image and the reference phase N, so that an NRMSE can be linked to a deformation in mm.
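The link between NRMSE and displacement can be sketched as follows. The abstract does not state how the NRMSE is normalized or whether the 11 images include the end phases, so the range normalization, the inclusive fractions from 0 to 1, and the function names below are assumptions.

```python
import numpy as np


def nrmse(image, reference):
    """RMSE normalized by the intensity range of the reference (assumed convention)."""
    diff = image.astype(np.float64) - reference.astype(np.float64)
    rmse = np.sqrt(np.mean(diff ** 2))
    return rmse / (reference.max() - reference.min())


def intermediate_fields(field_mm, n_steps=11):
    """Scale a deformation field (in mm) at regular fractions between two phases.

    field_mm has shape (..., 3) and maps phase N to phase N+1.
    Returns a list of (fraction, scaled_field) pairs.
    """
    fractions = np.linspace(0.0, 1.0, n_steps)
    return [(float(f), f * field_mm) for f in fractions]


def mean_displacement_mm(field_mm):
    """Mean magnitude of the displacement vectors, in mm."""
    return float(np.mean(np.linalg.norm(field_mm, axis=-1)))
```

Warping phase N with each scaled field (for instance with a registration toolkit) would give the intermediate images; pairing `nrmse(intermediate, phase_n)` with `mean_displacement_mm(scaled_field)` then gives one (NRMSE, mm) point per intermediate phase.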

Results

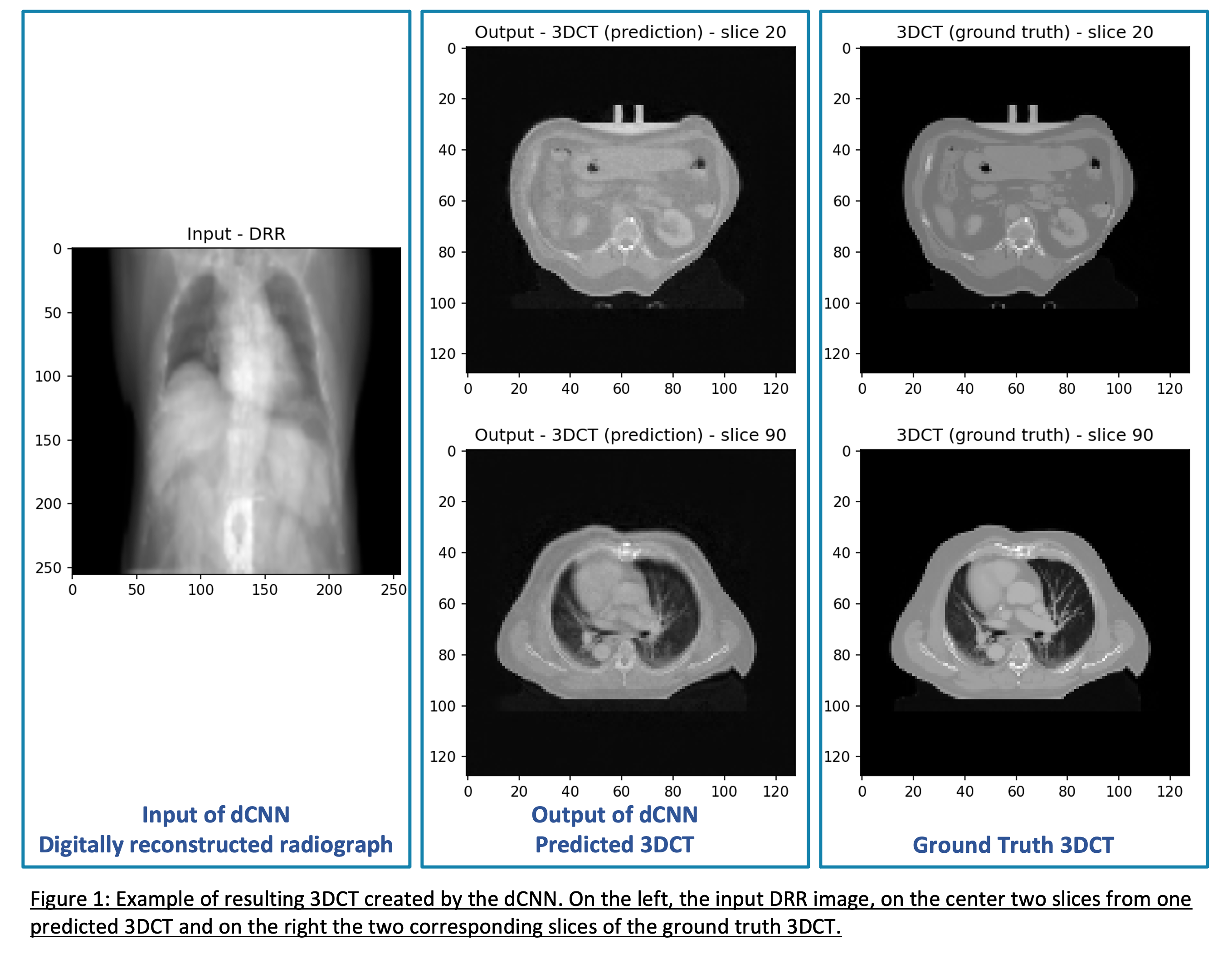

Applying the trained dCNN to one DRR of the test set takes less than a second. Figure 1 shows one DRR from the test set (input of the dCNN) and two slices of the predicted 3DCT (output of the dCNN) compared with the corresponding ground-truth slices. Computing the NRMSE for each predicted 3DCT image of the additional test set shows that the further the intermediate phase is from the reference phase N, the higher the NRMSE. If the displacement between phases 2 and 3 is equal to 1 mm, the displacement predicted by the network is slightly larger, i.e., 1.26 +/- 0.015 mm. This corresponds to an NRMSE of 0.0459 +/- 0.01028 between phases 2 and 3, compared with an NRMSE of 0.0565 +/- 0.0059 for both phases predicted by the network.

Conclusion

The proposed method allows the reconstruction of a 3DCT image from a single DRR, which can be used in real time to better localize the tumor and improve the treatment of mobile tumors.

[1] P. Henzler, V. Rasche, T. Ropinski, T. Ritschel, Single-image Tomography: 3D Volumes from 2D Cranial X-Rays. arXiv:1710.04867v3 [cs.GR] (2018). Available at: https://arxiv.org/abs/1710.04867