Interactive deep-learning for tumour segmentation in head and neck cancer radiotherapy

OC-0119

Abstract

Authors: Zixiang Wei1, Jintao Ren1, Jesper Grau Eriksen2, Stine Sofia Korreman3, Jasper Albertus Nijkamp1

1Aarhus University, Department of Clinical Medicine - DCPT - Danish Center for Particle Therapy, Aarhus, Denmark; 2Aarhus University, Department of Clinical Medicine - Department of Experimental Clinical Oncology, Aarhus, Denmark; 3Aarhus University, Department of Clinical Medicine - The Department of Oncology, Aarhus, Denmark

Purpose or Objective

Deep learning has substantially improved tumour (GTV) auto-segmentation, but considerable manual correction is still needed. In interactive deep learning (iDL), the manual corrections made while delineating are used to update the deep-learning model, minimising the input needed to achieve acceptable segmentations. We developed an iDL tool for GTV segmentation that takes annotated slices as input, and we simulated its performance on a head and neck cancer (HNC) dataset. Our aim was to achieve clinically acceptable segmentations with at most five annotated slices.

Material and Methods

Multi-modal imaging data from 204 HNC patients with clinical tumour (GTVt) and lymph node (GTVn) delineations were randomly split into training (n=107), validation (n=22), test (n=24) and independent test (n=51) sets. We used a 2D UNet++ as the convolutional neural network (CNN) architecture.
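A patient-level random split with the subset sizes given above can be sketched as follows. This is a minimal illustration only: the patient identifiers, the seed and the helper name `split_patients` are hypothetical, and the abstract does not state how the randomisation was implemented.

```python
import random

def split_patients(patient_ids, sizes, seed=0):
    """Randomly partition patient IDs into disjoint subsets of the given sizes.

    Splitting per patient (not per slice) keeps all slices of one patient
    in the same subset.
    """
    if sum(sizes.values()) != len(patient_ids):
        raise ValueError("subset sizes must add up to the number of patients")
    shuffled = list(patient_ids)
    random.Random(seed).shuffle(shuffled)  # hypothetical fixed seed for reproducibility
    splits, start = {}, 0
    for name, n in sizes.items():
        splits[name] = shuffled[start:start + n]
        start += n
    return splits

sizes = {"train": 107, "validation": 22, "test": 24, "independent_test": 51}
splits = split_patients([f"HNC{i:03d}" for i in range(204)], sizes)  # hypothetical IDs
print({name: len(ids) for name, ids in splits.items()})
```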

First, a baseline CNN was trained on the training and validation sets. We then simulated oncologist annotations on the test set by replacing the predicted tumour contour on selected slices with the ground-truth contour. These simulations were used to optimise the iDL hyperparameters and to systematically assess how the choice of annotated slices affected segmentation accuracy. Each simulated patient started from the baseline CNN, so iDL was used only for patient-specific optimisation. Next, iDL performance was evaluated in simulations on the independent test set using the optimised hyperparameters and slice selection strategy. Finally, one radiation oncologist performed real-time iDL-supported GTVt segmentation on three cases.
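The simulated annotation step, replacing the prediction on selected slices with the ground truth, can be sketched in Python with NumPy. The sketch assumes binary 3D masks with the craniocaudal (axial slice) direction along axis 0; `simulate_annotation` is a hypothetical helper, and the subsequent patient-specific fine-tuning of the CNN on the annotated slices is not shown.

```python
import numpy as np

def simulate_annotation(prediction, ground_truth, annotated_slices, axis=0):
    """Replace the predicted mask on the given slices with the ground-truth mask.

    prediction, ground_truth: binary 3D arrays of identical shape.
    annotated_slices: slice indices along `axis` that the simulated
    oncologist 'annotates'. The input prediction is left unchanged.
    """
    if prediction.shape != ground_truth.shape:
        raise ValueError("prediction and ground truth must have the same shape")
    corrected = prediction.copy()
    index = [slice(None)] * prediction.ndim
    for s in annotated_slices:
        index[axis] = s
        corrected[tuple(index)] = ground_truth[tuple(index)]
    return corrected

# Toy example: an empty prediction and a two-slice ground-truth "tumour".
pred = np.zeros((4, 3, 3), dtype=bool)
gt = np.zeros((4, 3, 3), dtype=bool)
gt[1:3, 1, 1] = True
corrected = simulate_annotation(pred, gt, annotated_slices=[1])
print(int(corrected.sum()))  # 1: only slice 1 was copied from the ground truth
```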

For evaluation, the Dice similarity coefficient (DSC), mean surface distance (MSD) and 95th-percentile Hausdorff distance (HD95%) were assessed at baseline and after every iDL update. The iDL retraining time was also recorded.
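The three evaluation metrics can be illustrated with the minimal NumPy sketch below. Conventions for MSD and HD95% differ between toolkits, and the abstract does not specify which was used; this sketch assumes 6-connected boundary voxels, pools the directed surface distances from both contours, and reports their mean (MSD) and 95th percentile (HD95%). The brute-force pairwise distance is only practical for small masks; for CT-sized volumes a distance transform such as `scipy.ndimage.distance_transform_edt` is the usual choice.

```python
import numpy as np

def dice(a, b):
    """Dice similarity coefficient of two binary masks (1.0 if both are empty)."""
    a, b = a.astype(bool), b.astype(bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(a, b).sum() / denom

def surface_points(mask, spacing):
    """Physical coordinates (mm) of boundary voxels, using 6-connectivity."""
    mask = mask.astype(bool)
    padded = np.pad(mask, 1, constant_values=False)
    interior = mask.copy()
    for axis in range(3):
        for shift in (-1, 1):
            # A voxel stays interior only if its neighbour along this axis is foreground.
            interior &= np.roll(padded, shift, axis=axis)[1:-1, 1:-1, 1:-1]
    return np.argwhere(mask & ~interior) * np.asarray(spacing, dtype=float)

def symmetric_surface_distances(a, b, spacing):
    """Distances from each surface point of a to the surface of b, and vice versa."""
    pa, pb = surface_points(a, spacing), surface_points(b, spacing)
    if len(pa) == 0 or len(pb) == 0:
        raise ValueError("surface distances are undefined for an empty mask")
    # Brute-force pairwise distances: fine for a sketch, too memory-hungry for full CT volumes.
    d = np.linalg.norm(pa[:, None, :] - pb[None, :, :], axis=-1)
    return np.concatenate([d.min(axis=1), d.min(axis=0)])

def msd_and_hd95(a, b, spacing=(1.0, 1.0, 1.0)):
    """Mean and 95th percentile of the pooled symmetric surface distances (mm)."""
    d = symmetric_surface_distances(a, b, spacing)
    return float(d.mean()), float(np.percentile(d, 95))

# Toy example: a 4x4x4 cube and the same cube shifted by one slice.
a = np.zeros((10, 10, 10), dtype=bool)
a[2:6, 2:6, 2:6] = True
b = np.roll(a, 1, axis=0)
print(dice(a, b))  # 0.75
msd, hd95 = msd_and_hd95(a, b)
```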

Results

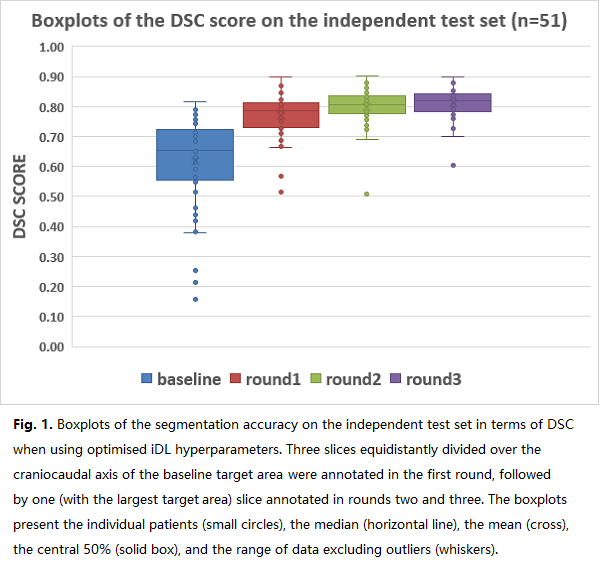

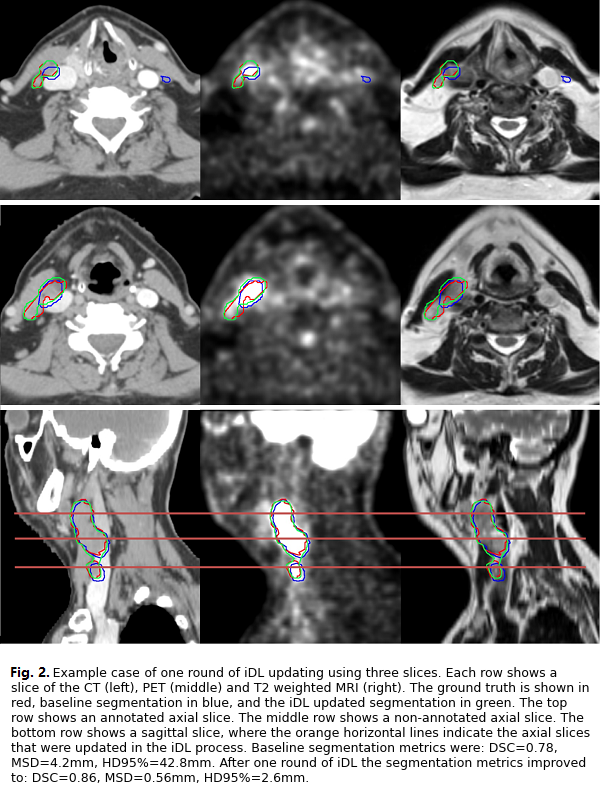

The median baseline segmentation accuracy on the independent test set was DSC=0.65, MSD=4.4 mm and HD95%=27.3 mm (Fig. 1). The best-performing slice selection strategy was to first annotate three slices distributed equidistantly along the craniocaudal extent of the baseline prediction, followed by two iDL rounds in which one slice (the slice with the largest target area) was annotated per round. With this strategy, segmentation accuracy improved to DSC=0.82, MSD=1.4 mm and HD95%=8.9 mm after only five annotated slices (Fig. 1). Each iDL retraining update took 30 seconds. An example case is shown in Fig. 2.
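The best-performing slice selection strategy can be expressed as a short loop. This is a sketch under stated assumptions, not the authors' implementation: axis 0 is craniocaudal, the three initial slices are placed at the interior points that divide the predicted craniocaudal extent into four equal parts, the "largest target area" slice is taken from the current (updated) prediction among slices not yet annotated, and `update_and_predict` is a hypothetical callback standing in for one iDL retraining and re-prediction.

```python
import numpy as np

def initial_slices(prediction, n=3):
    """Axial slices placed equidistantly inside the craniocaudal extent of the prediction.

    Assumption: the extent is divided into n + 1 equal parts and the n interior
    division points are used (axis 0 = craniocaudal).
    """
    occupied = np.flatnonzero(prediction.any(axis=(1, 2)))
    if occupied.size == 0:
        raise ValueError("baseline prediction is empty")
    positions = np.linspace(occupied[0], occupied[-1], n + 2)[1:-1]
    return sorted({int(round(float(z))) for z in positions})

def largest_area_slice(prediction, exclude):
    """Not-yet-annotated axial slice with the largest predicted target area (in voxels)."""
    areas = prediction.reshape(prediction.shape[0], -1).sum(axis=1).astype(float)
    areas[list(exclude)] = -1.0  # never re-select an annotated slice
    return int(np.argmax(areas))

def select_and_update(baseline_prediction, update_and_predict, n_initial=3, n_rounds=2):
    """Annotate n_initial equidistant slices, then add the largest-area slice per iDL round."""
    annotated = initial_slices(baseline_prediction, n_initial)
    prediction = update_and_predict(annotated)  # first iDL update
    for _ in range(n_rounds):
        annotated.append(largest_area_slice(prediction, annotated))
        prediction = update_and_predict(annotated)
    return annotated, prediction

# Toy prediction: target on slices 4-16; slices 9 and 12 have the largest areas.
pred = np.zeros((20, 8, 8), dtype=bool)
pred[4:17, 2:6, 2:6] = True  # area 16 per slice
pred[9, 1:7, 1:7] = True     # area 36
pred[12, 1:6, 1:6] = True    # area 25

calls = []
def fake_update(slices):
    """Hypothetical stand-in for retraining: records the slices, returns pred unchanged."""
    calls.append(list(slices))
    return pred

annotated, final_prediction = select_and_update(pred, fake_update)
print(annotated)  # [7, 10, 13, 9, 12]
```

Returning the baseline unchanged only exercises the selection logic; in the described workflow each call to `update_and_predict` corresponds to one iDL update, so this strategy involves three updates for five annotated slices.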

In the real-time sessions, the radiation oncologist used only one round of iDL, annotating 16, 10 and 7 slices, respectively, for the three patients before the update. After the update, 3, 1 and 2 further slices were manually annotated to achieve clinically acceptable segmentations.

Conclusion

We presented a slice-based iDL segmentation tool that requires only limited observer input. In the iDL simulations, annotating three to five slices substantially improved segmentation accuracy. In real-time use, the tool produced clinically acceptable segmentations after two rounds of annotation.