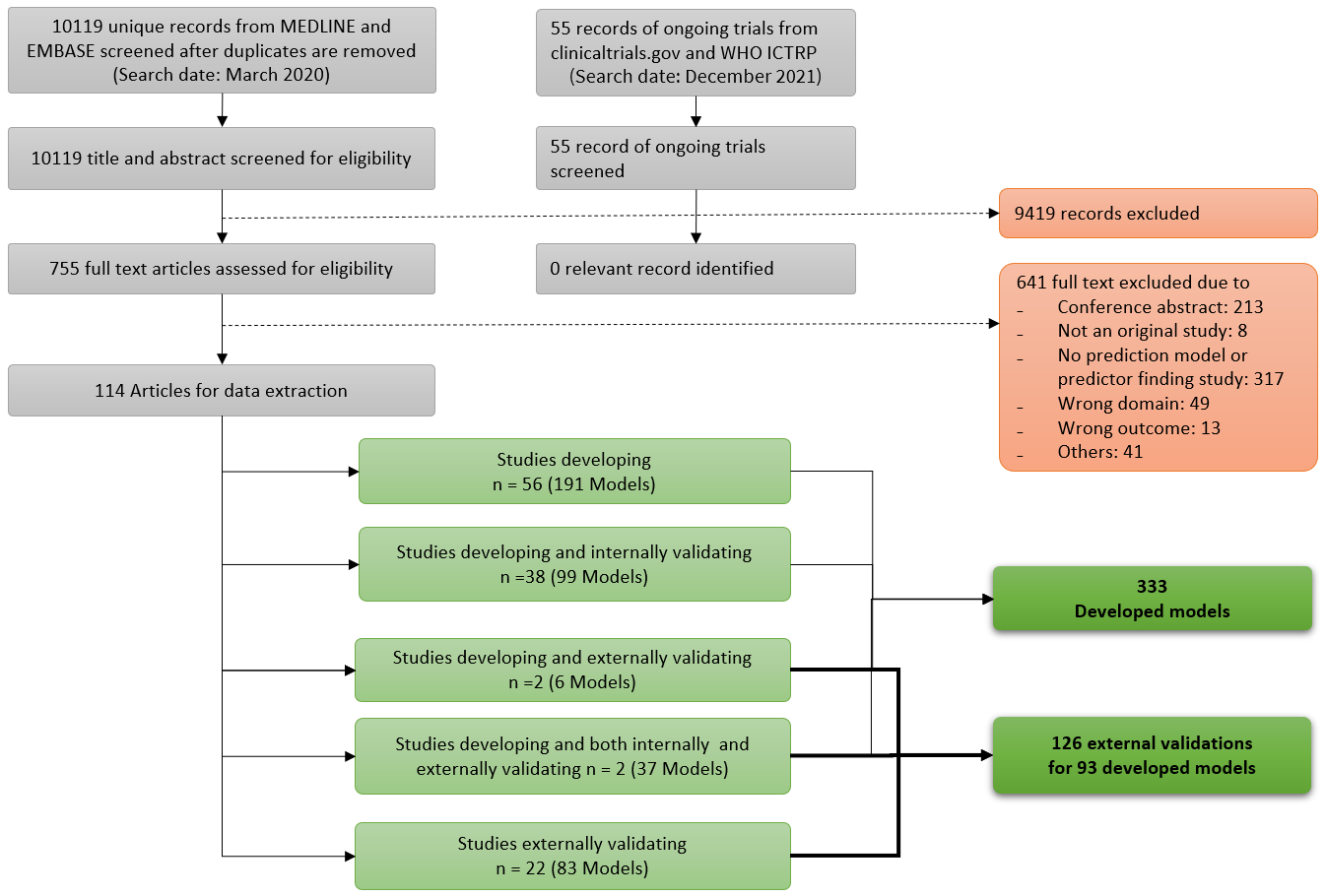

A total of 10,119 unique potentially relevant articles and 55 ongoing trials were identified. Of these, 9,419 were excluded after title and abstract screening, leaving 755 articles for full-text screening. During full-text screening, 641 articles were excluded, and data were extracted from the remaining 114 articles (Fig 1).
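As a check on the flow counts, the reported numbers reconcile only if the 55 ongoing trials are screened together with the 10,119 articles at the title and abstract stage (10,119 − 9,419 alone would leave 700, not 755):

$$
\begin{aligned}
10{,}119 + 55 &= 10{,}174 \quad \text{(screened on title and abstract)},\\
10{,}174 - 9{,}419 &= 755 \quad \text{(screened in full text)},\\
755 - 641 &= 114 \quad \text{(included for data extraction)}.
\end{aligned}
$$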

Fig 1. Study flow diagram

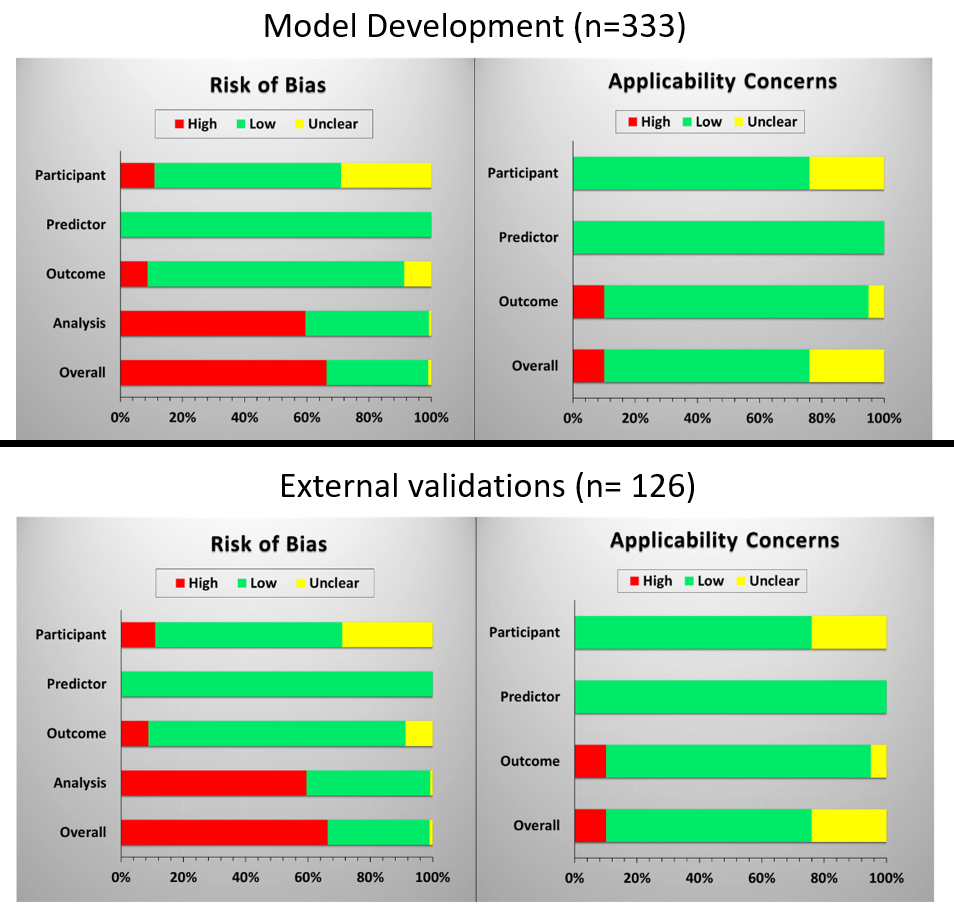

Of the 333 models developed in 96 articles, 148 (44%) aimed to predict toxicities related to salivary gland function, followed by swallowing-related toxicities (18%), brain and nerve toxicities (7%) and hypothyroidism (6%). Apparent calibration and discrimination were reported for 68 (20%) and 109 (33%) models, respectively, with apparent c-statistics ranging from 0.60 to 0.98. Only 48 (14%) models, from 5 articles, were judged to have both low ROB and low concerns regarding applicability (Fig 2).

Fig 2. Assessment of risk of bias and applicability concerns

No external validation was performed for 244 models (73%). The remaining 89 models, together with 4 additional models originally developed for patients with other types of cancer, underwent 126 external validations in 22 articles. These most often concerned models predicting outcomes related to salivary dysfunction (n=51, 40%), followed by swallowing (n=30, 24%) and hypothyroidism (n=24, 19%). The c-statistics reported at external validation ranged from 0.19 to 0.96. Overall ROB was high in 67% of the external validations, and applicability concerns were high in 10% (Fig 2).
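To help interpret the c-statistics above, the sketch below computes the c-statistic for a binary outcome as the proportion of (event, non-event) patient pairs in which the patient with the event received the higher predicted risk, with tied predictions counted as half. The function name `c_statistic` and the example risks and outcomes are hypothetical illustrations, not data or code from any of the reviewed studies.

```python
from itertools import product


def c_statistic(predicted, outcome):
    """Concordance (c) statistic for a binary outcome (hypothetical helper).

    predicted: predicted risks, one per patient
    outcome:   observed outcomes, 1 = toxicity occurred, 0 = no toxicity
    Returns the fraction of (event, non-event) pairs in which the event
    patient had the higher predicted risk; ties count as 0.5.
    """
    events = [p for p, y in zip(predicted, outcome) if y == 1]
    non_events = [p for p, y in zip(predicted, outcome) if y == 0]
    if not events or not non_events:
        raise ValueError("need at least one event and one non-event")

    score = 0.0
    for e, n in product(events, non_events):
        if e > n:
            score += 1.0  # concordant pair
        elif e == n:
            score += 0.5  # tied prediction
        # discordant pairs add nothing
    return score / (len(events) * len(non_events))


# Hypothetical example: two patients with and two without the toxicity.
outcomes = [1, 1, 0, 0]
print(c_statistic([0.9, 0.4, 0.6, 0.2], outcomes))  # 0.75
# Inverting the ranking of the same patients gives 1 - 0.75.
print(c_statistic([0.1, 0.6, 0.4, 0.8], outcomes))  # 0.25
```

A c-statistic of 0.5 means the model ranks patients no better than chance, and a value below 0.5, such as the lowest external validation result reported above, means the model tends to assign higher risk to patients who did not develop the toxicity.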

Six models (3 for dysphagia and 3 for hypothyroidism) had been externally validated at least twice for the same outcome as in the original development study. These models generally showed good discrimination; however, information on their calibration was largely lacking and their ROB was high. Because no model had been externally validated at least 5 times, we could not perform any meta-analysis of model performance across validation studies.